What Is a Tokenomics Data Room? (Why Investors Expect One)

A tokenomics data room bundles token design, financial models, and compliance documents into a single investor-ready package. Here's what goes inside and why it matters.

A tokenomics data room is a structured documentation package that demonstrates to investors, regulators, and exchange listing teams that your token model is sound. It contains mechanism design rationale, financial models including Monte Carlo simulations, supply-demand projections, compliance artifacts, and audit reports, all organized to institutional standards so that every stakeholder can complete due diligence without chasing you for documents.

# What Is a Tokenomics Data Room?

Think of it as the due diligence room in traditional M&A, purpose-built for token economies. Instead of cap tables and P&L statements, the core documents are token design specifications, unlock schedules, circulating supply projections, and compliance summaries that cover every angle an investor or regulator will examine.

The difference between a tokenomics data room and a pitch deck is the difference between "trust us, the model works" and showing the math. According to Blockchain App Factory's 2026 Token Launch Guide, exchange-facing data room essentials now include smart contract audit reports, legal opinions, tokenomics documentation, and governance structures as baseline requirements.

The stakes are higher than ever. Crypto venture capital deployment exceeded $25 billion in 2025, a 73% increase from 2024 (Source: DL News). With that much capital in play, investors aren't guessing anymore. They're running due diligence processes that rival traditional tech VC — and the data room is where that process starts.

A complete data room answers every question before it's asked. Your legal team has the compliance framework. Your developers have the technical specs. Your investors have the financial models. No one's waiting on you to send a follow-up document.

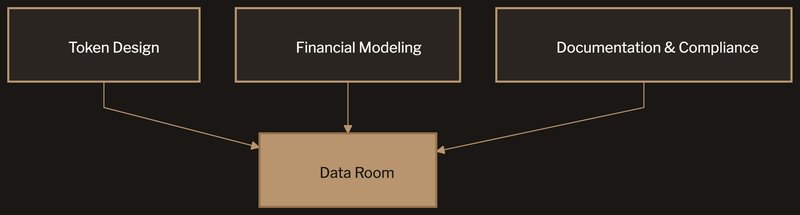

# The Three Pillars of a Data Room

Every complete tokenomics data room is built on what we call the Three Pillars Framework: token design, financial modeling, and documentation and compliance. Miss one and sophisticated investors will find the gaps before you get to the term sheet.

## Token Design

This is the mechanism design work — how your token actually functions within the ecosystem and how value flows through the system. A solid token design section covers four areas that investors will dissect.

Supply architecture. Fixed, inflationary, deflationary, or hybrid models. Total supply, circulating supply projections, and the reasoning behind those numbers. In 2026, leading projects use decaying issuance models where token creation decreases over time, similar to Bitcoin's halving mechanism (Source: Weex). Your data room needs to show why your supply model fits your specific business.
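
To make the decaying-issuance idea concrete, here is a minimal Python sketch that assumes a simple geometric decay per epoch. The function names, the 400M genesis supply, and the 100M first-epoch emission are hypothetical. A decay factor of 0.5 mimics a halving-style schedule; values closer to 1 give a gentler taper. Because the emissions form a geometric series, total supply converges to a hard cap equal to genesis supply plus first-epoch emission divided by (1 - decay).

```python
def emission_schedule(first_epoch_emission: float, decay: float, epochs: int) -> list[float]:
    """Tokens minted in each epoch when issuance shrinks by `decay` every epoch.

    Hypothetical model: decay=0.5 approximates a halving-style schedule.
    """
    if not 0.0 < decay < 1.0:
        raise ValueError("decay must be strictly between 0 and 1")
    return [first_epoch_emission * decay**epoch for epoch in range(epochs)]


def supply_cap(genesis_supply: float, first_epoch_emission: float, decay: float) -> float:
    """Limit of total supply: genesis plus the geometric-series sum E0 / (1 - decay)."""
    return genesis_supply + first_epoch_emission / (1.0 - decay)


# Hypothetical parameters: 400M tokens at genesis, 100M minted in epoch 0, halving each epoch.
print(emission_schedule(100e6, 0.5, epochs=4))  # [100000000.0, 50000000.0, 25000000.0, 12500000.0]
print(supply_cap(400e6, 100e6, 0.5))            # 600000000.0
```

The same schedule would then feed the circulating-supply projections, so reviewers can check that the cap and the per-epoch emissions match the numbers quoted elsewhere.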

Distribution plan. Who gets what, when, and under what conditions. Allocation percentages with full vesting schedules, cliff periods, and unlock curves mapped month by month. This section makes or breaks investor confidence — it reveals whether your incentives are aligned with long-term value creation or short-term extraction.
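
A month-by-month unlock curve is straightforward to generate and hard to argue with. The Python sketch below assumes one common convention: nothing unlocks before the cliff, the amount accrued during the cliff releases in the cliff month, and the remainder unlocks linearly each month until the vesting period (counted from TGE) ends. Other conventions, such as starting linear vesting only after the cliff, change the numbers, so state which one you use. The 150M allocation, 12-month cliff, and 36-month vesting period are hypothetical.

```python
def cumulative_unlocked(allocation: float, month: int, cliff_months: int, vesting_months: int) -> float:
    """Tokens unlocked by the end of `month` (month 0 = TGE).

    Assumed convention: zero before the cliff, then linear accrual measured from
    TGE, so the cliff month releases everything accrued during the cliff.
    """
    if month < cliff_months:
        return 0.0
    # Multiply before dividing so round-number results stay exact in floating point.
    return allocation * min(month, vesting_months) / vesting_months


def unlock_table(allocation: float, cliff_months: int, vesting_months: int, horizon: int):
    """Rows of (month, newly unlocked, cumulative unlocked) from TGE to `horizon`."""
    rows, previous = [], 0.0
    for month in range(horizon + 1):
        total = cumulative_unlocked(allocation, month, cliff_months, vesting_months)
        rows.append((month, total - previous, total))
        previous = total
    return rows


# Hypothetical team allocation: 150M tokens, 12-month cliff, 36-month vesting.
for month, new, total in unlock_table(150e6, cliff_months=12, vesting_months=36, horizon=36):
    if month in (11, 12, 13, 36):
        print(f"month {month:>2}: +{new:>12,.0f}  cumulative {total:>12,.0f}")
```

Under this convention a third of the allocation (50M tokens) unlocks in the single cliff month, which is exactly the kind of supply event investors look for in the table.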

Value accrual mechanics. How the token captures and retains value over time. Fee mechanisms, burn mechanics, staking yields, and governance rights — each mapped to sustainable revenue drivers. Investors want to see exactly how protocol revenue flows to token holders and through what mechanisms.
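
One way to make fee flows auditable is to express the routing as explicit parameters and derive the holder-facing numbers from them. The sketch below assumes a hypothetical three-way split of protocol fees between buy-and-burn, stakers, and a treasury, and converts a year of fee revenue into tokens burned and a fee-funded (non-inflationary) staking yield. The split, the $10M fee figure, the $0.50 price, and the 200M staked tokens are placeholders, and holding the token price constant over the year is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeeSplit:
    """Hypothetical routing of protocol fees; the three shares must sum to 1."""
    burn: float
    stakers: float
    treasury: float

    def __post_init__(self) -> None:
        if abs(self.burn + self.stakers + self.treasury - 1.0) > 1e-9:
            raise ValueError("fee shares must sum to 1")


def annual_value_accrual(fees_usd: float, token_price_usd: float,
                         staked_tokens: float, split: FeeSplit) -> dict[str, float]:
    """Convert one year of fee revenue into holder-facing metrics at a constant price."""
    return {
        "tokens_burned": fees_usd * split.burn / token_price_usd,
        # Yield paid from real fees only; emissions-funded rewards are excluded.
        "fee_staking_yield": fees_usd * split.stakers / (staked_tokens * token_price_usd),
        "treasury_usd": fees_usd * split.treasury,
    }


# Placeholder inputs: $10M annual fees, $0.50 token, 200M tokens staked.
metrics = annual_value_accrual(10e6, 0.50, 200e6, FeeSplit(burn=0.3, stakers=0.5, treasury=0.2))
print(metrics)
```

With these placeholders the split works out to about 6M tokens burned, a 5% fee-funded staking yield, and $2M to the treasury. Each figure traces back to a stated parameter, which is what makes it checkable.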

Utility mapping. Every use case for the token within the ecosystem, with clear distinctions between primary and secondary utility. Investors want to see real utility driving demand, not circular tokenomics where the token's only purpose is to be traded.

## Financial Modeling

This is where revenue-first design comes to life. Your financial models need to demonstrate the token economy is sustainable — not just at launch, but three to five years out. This pillar is where most projects either earn investor confidence or lose it permanently.

Monte Carlo simulations are the backbone of credible financial modeling for token economies. Rather than presenting a single "expected" scenario, Monte Carlo methods run thousands of randomized simulations across your key variables — user growth, token velocity, market conditions, fee revenue — to produce probability distributions of outcomes.

This approach shows investors not just what might happen, but the full range of possible outcomes and the confidence level attached to each. A Monte Carlo output showing that your token holds its value even in the 10th-percentile scenario is far more convincing than a single-line projection in which everything goes perfectly.
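
Here is a toy version in Python, using only the standard library. Each run draws a path of monthly user growth, occasional market shocks, and a fee level, then records annualized fee revenue at month 36. Reading off the 10th, 50th, and 90th percentiles gives the distribution view described above instead of a single line. Every distribution and number here (50k starting users, growth of 4% ± 3% a month, a 10% monthly chance of a 25% user drawdown, $2 ± $0.50 in monthly fees per user) is a hypothetical placeholder, not a calibrated model.

```python
import random
import statistics


def simulate_annual_fees(n_runs: int = 10_000, months: int = 36, seed: int = 42) -> list[float]:
    """Monte Carlo draws of annualized fee revenue after `months` (toy model).

    Hypothetical assumptions: 50k starting users; monthly growth ~ Normal(4%, 3%);
    a 10% chance each month of a 25% user drawdown (crude market shock);
    average monthly fees per user ~ Normal($2.00, $0.50), floored at zero.
    """
    rng = random.Random(seed)  # seeded so reviewers can reproduce the run
    outcomes = []
    for _ in range(n_runs):
        users = 50_000.0
        for _ in range(months):
            users *= 1.0 + rng.gauss(0.04, 0.03)
            if rng.random() < 0.10:
                users *= 0.75
        fee_per_user = max(0.0, rng.gauss(2.0, 0.5))
        outcomes.append(users * fee_per_user * 12)
    return outcomes


runs = simulate_annual_fees()
deciles = statistics.quantiles(runs, n=10)  # nine cut points: P10, P20, ..., P90
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"P10 ${p10:,.0f}   P50 ${p50:,.0f}   P90 ${p90:,.0f}")
```

The number that matters for the data room is the spread itself: if the P10 outcome still covers the protocol's costs, that is the downside evidence investors ask for.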

Key models in the data room include supply projections mapped against demand drivers across conservative, moderate, and aggressive scenarios. Revenue modeling shows how the protocol generates fees, where those fees flow, and what happens to them under different market conditions.
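
A deterministic scenario table is the simplest version of these projections. The Python sketch below assumes demand can be proxied by the tokens users need to hold (users × a hypothetical 1,000 tokens each) and compares it with circulating supply that grows by a flat 5M tokens a month from 200M. The three monthly growth rates standing in for the conservative, moderate, and aggressive cases are placeholders; in a real model they would come from your own demand drivers and unlock schedule.

```python
# Hypothetical monthly user-growth assumptions for each scenario.
SCENARIOS = {"conservative": 0.02, "moderate": 0.05, "aggressive": 0.08}


def project_coverage(monthly_growth: float, months: int = 36,
                     start_users: float = 50_000, tokens_per_user: float = 1_000,
                     start_circulating: float = 200e6, monthly_unlock: float = 5e6):
    """Return (month, token demand, circulating supply, demand/supply) rows.

    Demand proxy (an assumption): tokens users must hold = users * tokens_per_user.
    Supply: circulating tokens grow by a flat `monthly_unlock` each month.
    """
    rows = []
    users, circulating = start_users, start_circulating
    for month in range(1, months + 1):
        users *= 1 + monthly_growth
        circulating += monthly_unlock
        demand = users * tokens_per_user
        rows.append((month, demand, circulating, demand / circulating))
    return rows


for name, growth in SCENARIOS.items():
    month, demand, supply, ratio = project_coverage(growth)[-1]
    print(f"{name:>12}: month {month} demand {demand / 1e6:,.0f}M vs supply {supply / 1e6:,.0f}M (ratio {ratio:.2f})")
```

With these placeholders only the aggressive case ends month 36 with demand above the 380M circulating supply, which tells a reviewer immediately how much of the thesis rests on the optimistic growth assumption.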

Stress testing goes deeper than scenarios. What happens if your user base grows 50% slower than expected? What if a major market downturn hits in month six? What if your primary revenue source drops by 70%? These questions need quantitative answers, not qualitative hand-waving.
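
Stress tests like these can be scripted so every shock is explicit and repeatable. The Python sketch below reruns a simple fee-revenue path under the three shocks named above (growth 50% slower, a downturn in month six, a 70% drop in the primary revenue source) and counts how many months revenue covers a fixed cost base. The 40% user loss used for the downturn, the $150k monthly cost figure, and all other parameters are hypothetical.

```python
from typing import Optional


def revenue_path(months: int = 24, start_users: float = 50_000, growth: float = 0.05,
                 fee_per_user: float = 2.0, shock_month: Optional[int] = None,
                 shock_user_loss: float = 0.0, revenue_cut: float = 0.0) -> list[float]:
    """Monthly fee revenue (USD) under optional stress shocks (hypothetical model).

    shock_month / shock_user_loss: one-off drop in users in that month (0.4 = -40%).
    revenue_cut: permanent fractional cut to fee revenue (0.7 = a 70% drop).
    """
    users, path = start_users, []
    for month in range(1, months + 1):
        users *= 1 + growth
        if month == shock_month:
            users *= 1 - shock_user_loss
        path.append(users * fee_per_user * (1 - revenue_cut))
    return path


STRESSES = {
    "baseline": {},
    "growth 50% slower": {"growth": 0.025},
    "downturn in month 6": {"shock_month": 6, "shock_user_loss": 0.40},
    "primary revenue -70%": {"revenue_cut": 0.70},
}
MONTHLY_COSTS_USD = 150_000  # hypothetical operating cost base

for name, shock in STRESSES.items():
    path = revenue_path(**shock)
    months_covered = sum(revenue >= MONTHLY_COSTS_USD for revenue in path)
    print(f"{name:<22} month-24 revenue ${path[-1]:>9,.0f}   months covering costs: {months_covered}/24")
```

Keeping each shock as a named dictionary entry means an investor's analyst can add their own scenario in one line and rerun the same model.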

Sensitivity analysis identifies which variables have the largest impact on token value. Investors want to know exactly where the risk lives — and Monte Carlo output makes that visible through clear probability distributions rather than single-point estimates.
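
A one-at-a-time sensitivity analysis, which is the data behind a tornado chart, takes only a few lines. The sketch below assumes a hypothetical value-per-token proxy (net annual fee revenue × a valuation multiple ÷ circulating supply), swings each input ±20% while holding the others at base, and ranks the inputs by the width of the resulting range. Both the proxy and the base values are illustrative, not a recommended valuation method.

```python
def value_per_token(p: dict[str, float]) -> float:
    """Hypothetical proxy: (annual fees - annual costs) * multiple / circulating supply."""
    net_revenue = p["users"] * p["fee_per_user"] * 12 - p["annual_costs"]
    return net_revenue * p["multiple"] / p["circulating"]


BASE = {"users": 200_000, "fee_per_user": 2.0, "annual_costs": 1.8e6,
        "multiple": 20.0, "circulating": 400e6}


def tornado(model, base: dict[str, float], swing: float = 0.20):
    """Swing each input by +/- `swing` alone; return bars sorted widest first.

    Each bar is (input, change at -swing, change at +swing) relative to the base value.
    """
    base_value = model(base)
    bars = []
    for name, value in base.items():
        low = model({**base, name: value * (1 - swing)}) - base_value
        high = model({**base, name: value * (1 + swing)}) - base_value
        bars.append((name, low, high))
    return sorted(bars, key=lambda bar: abs(bar[2] - bar[1]), reverse=True)


for name, low, high in tornado(value_per_token, BASE):
    print(f"{name:<13} {low:+.4f} / {high:+.4f} USD per token")
```

In this toy setup the fee-driving inputs (users and fee per user) rank first because the fixed cost base gives them leverage: a 20% change in gross fees moves net revenue by 32%.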

## Documentation and Compliance

The third pillar covers everything your legal team, regulators, and technical stakeholders need to sign off. According to Antier Solutions' compliance infrastructure guide, compliant token infrastructure in 2026 requires regulatory-aware identity layers with KYC/AML and secure custody using MPC key management.

Legal opinions cover token classification analysis, regulatory framework assessment, and jurisdictional considerations. This isn't boilerplate — it needs to address your specific token design and distribution model under the laws that apply to your target markets.

Compliance framework documents how your token design accounts for securities regulations, KYC/AML requirements, and transfer restrictions. With MiCA now in force across the EU and global frameworks tightening, this section has become non-negotiable for any project touching institutional capital.

Technical specifications detail smart contract architecture, token standard selection, and the implementation roadmap. Developers and technical auditors need enough detail to verify your mechanism design is actually implementable as described.

Audit reports from third-party firms reviewing your mechanism design, financial models, or smart contracts round out the compliance pillar. Our data room checklist provides the complete inventory of what belongs in each category.

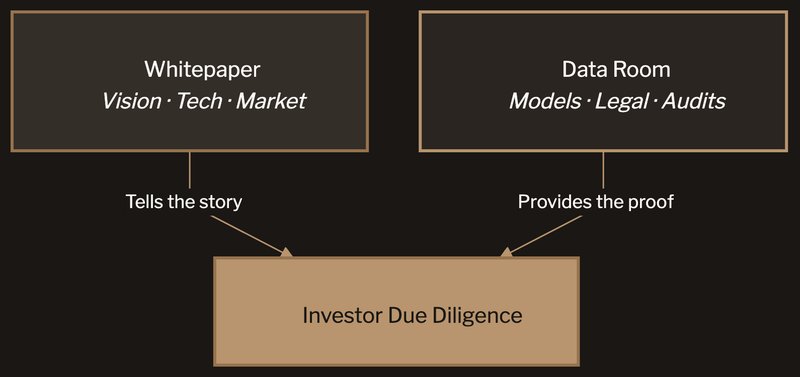

# Data Room vs. Whitepaper

We hear this constantly: "We already have a whitepaper. Do we really need a data room?" Yes. And here's why they serve different purposes.

A whitepaper tells the story. It explains your vision, technology, and market opportunity. It's designed to generate interest and attract potential investors, community members, and partners into your ecosystem.

A data room provides the proof. It's the evidence package that moves people from "interested" to "invested." Whitepaper-level tokenomics documentation explains supply logic, distribution, and economic models conceptually. The data room goes further with Monte Carlo stress tests, legal analysis, and audited models that verify every claim the whitepaper makes (Source: Weex).

The whitepaper says "our token model creates sustainable value through fee redistribution." The data room shows the fee model spreadsheet with five-year projections, the sensitivity analysis showing which variables matter most, and the legal opinion confirming the fee structure doesn't create securities issues.

Projects that only have a whitepaper leave investors to fill in gaps with assumptions. Projects with a complete data room remove that uncertainty. We've seen fundraise timelines compress by 40-60% when the full documentation package is available from day one.

# What Investors Actually Look For

"We've seen unprecedented improvements in underlying fundamentals. We expect adoption of stablecoins and other tokenised assets to continue accelerating in 2026." — Mike Giampapa, General Partner, Galaxy Ventures (DL News)

That focus on fundamentals shows up in how investors evaluate deals. As noted in Blockchain App Factory's research, premium exchanges now conduct deep risk assessments covering regulatory exposure, token concentration risk, governance controls, operational maturity, and long-term viability. Institutional VCs follow the same playbook.

After advising on $100MM+ in combined raises, we've seen which areas get scrutinized hardest:

Revenue sustainability. Does this token economy generate real revenue, or is it entirely dependent on new participants? Investors have seen enough failed models to be permanently skeptical of tokens without cash flow. Revenue-first design isn't a nice-to-have — it's the filter.

Distribution fairness. Is the team allocation reasonable? Are vesting schedules aligned with long-term incentives? We've seen investors walk away from otherwise solid projects because the team had a 30% allocation with a six-month cliff. That signals the wrong priorities.

Downside scenarios. Nobody cares about your bull case. Investors want Monte Carlo distributions showing what happens when things go wrong. If your model falls apart at 50% of projected adoption, solve that before the fundraise — not during it.

Regulatory awareness. With MiCA in full force and global frameworks tightening, investors need to see compliance baked into the design. A legal opinion and regulatory strategy aren't optional anymore. Compliance-ready token standards like ERC-3643 exist specifically for regulated assets.

Concentration risk. How concentrated is your token supply? Wallet distribution analysis shows whether a small number of addresses control enough supply to destabilize the market. The Tokenomics Evidence Pack standard now includes concentration analysis as a baseline requirement (Source: Blockchain App Factory).
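
The core concentration metrics are easy to compute from a balance snapshot. The Python sketch below reports the share held by the top N wallets, the Herfindahl-Hirschman Index (the sum of squared holder shares; higher means more concentrated), and the smallest number of wallets that together hold more than half the supply. The balances are made up, and a real analysis would first label or cluster addresses, since exchange hot wallets, vesting contracts, and treasury multisigs can distort raw wallet counts.

```python
def concentration_metrics(balances: list[float], top_n: int = 5, threshold: float = 0.5) -> dict[str, float]:
    """Concentration statistics for a snapshot of token balances (one entry per holder)."""
    total = sum(balances)
    shares = sorted((b / total for b in balances), reverse=True)
    # Smallest number of top holders whose combined share exceeds `threshold`.
    cumulative, holders_to_threshold = 0.0, 0
    for share in shares:
        cumulative += share
        holders_to_threshold += 1
        if cumulative > threshold:
            break
    return {
        "top_n_share": sum(shares[:top_n]),
        "hhi": sum(s * s for s in shares),  # 0-1 scale; multiply by 10,000 for the points convention
        "holders_to_threshold": holders_to_threshold,
    }


# Made-up snapshot: four large wallets plus 400 small holders (1,000 tokens in total).
snapshot = [300, 150, 100, 50] + [1] * 400
print(concentration_metrics(snapshot))
```

For this snapshot the top five wallets hold about 60% of supply and three wallets are enough for a majority, which is the kind of figure that belongs next to the allocation tables.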

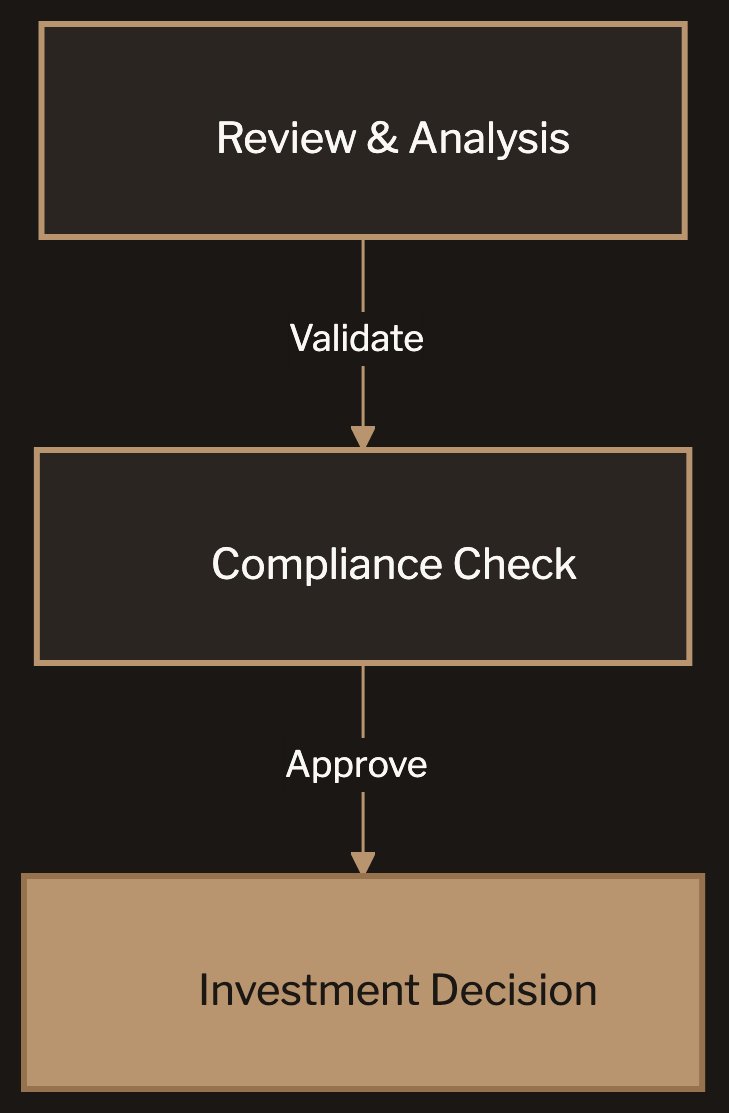

# How Investors Evaluate Your Data Room

The evaluation follows a predictable three-stage pattern. Understanding it helps you prepare documentation that answers questions before they're asked.

Stage 1: Initial screening. A quick scan of your token design, allocations, and high-level financials. This is where most projects get filtered out. If the basics don't hold up — unreasonable allocations, missing vesting detail, no revenue model — investors won't go deeper. Q4 2025 alone saw $8.5 billion invested across 425 deals, an 84% quarter-over-quarter increase in capital (Source: Galaxy Research). With that volume of deal flow, investors are far more selective about which projects make it past the initial gate.

Stage 2: Model analysis. Your financial models get stress-tested. Investors or their analysts pull apart assumptions and run their own scenarios. Clear, well-documented Monte Carlo outputs with stated assumptions and probability distributions accelerate this process dramatically. Messy models with unstated assumptions slow it down and signal poor rigor.

Stage 3: Compliance check. Legal counsel reviews your regulatory framework, token classification, and jurisdictional strategy. This step has become far more rigorous as regulations have matured. Projects that have their compliance documentation organized and accessible pass this stage in days rather than weeks.

Projects that survive all three stages reach the investment decision. The data room is what carries you through each one. Our EcoYield case study shows this evaluation process in practice for real-world asset tokens.

# Building Your Data Room

If you're starting from scratch, this is the sequence that has worked across the 80+ projects we've seen:

Start with token design. You can't model what you haven't designed. Get your mechanism design right first — supply architecture, distribution plan, value accrual mechanics. Nail the fundamentals before moving to projections. If your token design has structural problems, no amount of modeling will fix them.

Build the financial models. With a design in hand, build your Monte Carlo simulations. Start with conservative scenarios and work outward. If the model doesn't survive conservative assumptions, the design needs revision before you proceed. Run at least 10,000 Monte Carlo iterations across your key variables.
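
The 10,000-iteration figure can be sanity-checked with the binomial standard error. If each run produces a yes/no outcome (say, token value ending below a threshold) with true probability p, the standard error of the estimated probability after n runs is sqrt(p(1 - p) / n). The short Python sketch below evaluates it for a 10% tail event: at 10,000 runs the estimate is good to roughly ±0.3 percentage points (one standard error), versus about ±0.95 at 1,000 runs. Treat this as a rule of thumb; models with heavy tails or many interacting variables may need more runs, so check that your reported percentiles stop moving as you add iterations.

```python
import math


def tail_probability_se(p: float, n_runs: int) -> float:
    """Binomial standard error of a Monte Carlo estimate of a probability p."""
    return math.sqrt(p * (1 - p) / n_runs)


for n_runs in (1_000, 10_000, 100_000):
    se = tail_probability_se(0.10, n_runs)
    print(f"{n_runs:>7,} runs: 10% tail estimated to within +/-{se * 100:.2f} percentage points (1 SE)")
```

Because the error shrinks with the square root of the run count, going from 10,000 to 100,000 runs buys only about a threefold improvement in precision.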

Layer in compliance. With solid design and validated models, your legal team can assess the regulatory implications concretely. Doing this last means they're reviewing something substantive, not hypotheticals. They can point to specific mechanisms and distribution parameters when forming their legal opinion.

Package it professionally. Organization matters. Investors review hundreds of projects. A well-structured data room signals that you take this seriously and that your operational execution matches your ambition. Sloppy documentation suggests sloppy execution.

# Common Mistakes We See

After building data rooms for 80+ projects, certain patterns repeat:

Optimistic-only modeling. If your only scenario is the one where everything goes right, investors will build their own downside case — and it'll be worse than what you would have shown them. Run Monte Carlo simulations across thousands of scenarios and present the probability distributions honestly.

Missing vesting detail. "Team tokens vest over 3 years" isn't enough. Show the exact schedule, cliff periods, unlock percentages, and how they compare to market benchmarks. Investors have seen too many teams dump tokens after short vesting periods.

No sensitivity analysis. Which variables drive the most value? Which assumptions matter most? If you haven't done this work, investors will wonder what you're hiding. Sensitivity tables and tornado charts should be standard in every data room.

Treating compliance as an afterthought. Adding "we will work with legal counsel" to your roadmap isn't a compliance strategy. It's a red flag. Compliance needs to be embedded in the token design from day one, not bolted on after the mechanism is finalized.

Copying another project's tokenomics. We've seen projects lift allocation percentages from "successful" tokens without understanding why those numbers worked in a completely different context. Your tokenomics must emerge from your specific business model, revenue dynamics, and market position.

Ignoring concentration risk. If five wallets control 60% of your token supply and your data room doesn't address this, investors will notice. Wallet distribution analysis and concentration metrics need to be front and center alongside your allocation tables.

# Who Needs One?

Not every project needs a full institutional-grade data room on day one. But if any of these apply, it's time to get your house in order:

- You're raising capital from institutional investors or VCs

- Your token involves securities-like characteristics

- You're operating in jurisdictions with evolving token regulations (MiCA, the anticipated CLARITY Act)

- Your project has complex mechanism design (dual tokens, staking, burn mechanics)

- You need documentation that satisfies legal, technical, and business stakeholders simultaneously

- You're preparing for exchange listings that require tokenomics evidence packs

- You want to compress your fundraise timeline and reduce back-and-forth with investor teams

The standard keeps rising. What was acceptable two years ago doesn't cut it anymore. Institutional investors have more deal flow than ever, and the projects with the strongest documentation packages get funded first. The data room is what separates projects that close rounds from projects that stall in due diligence.

If you're building onchain and need your tokenomics to hold up under scrutiny, book a discovery call. We'll assess your project and tell you whether we're the right fit. Sometimes we're not. We'll tell you that too.